This post is part of the DP-600: Implementing Analytics Solutions Using Microsoft Fabric Exam Prep Hub. The topic falls under: Prepare data → Transform data → Convert column data types.

Converting data types is a fundamental transformation task in data preparation. It helps ensure data consistency, accurate calculations, correct filtering and sorting, reliable joins, and overall query correctness. In Microsoft Fabric, data type conversion can happen in Power Query, SQL, or Spark, depending on the workload and where you are in your data pipeline.

This article explains why, where, and how you convert data types in Fabric, with an emphasis on real-world scenarios and exam relevance.

Why Convert Data Types?

Data type mismatches can lead to:

- Erroneous joins (e.g., joining text to numeric)

- Incorrect aggregations (e.g., sums treating numbers as text)

- Filtering issues (e.g., date strings not filtering as dates)

- Unexpected sort order (e.g., text sorts differently from numbers)

In analytics, getting data types right is critical for both the correctness of results and query performance.
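The mismatches listed above are easy to reproduce outside Fabric. The following is a minimal sketch in plain Python, using made-up values, of how the same numbers behave when stored as text versus as integers; it illustrates the general idea rather than the behavior of any specific Fabric engine.

```python
# Illustrative values only: the same numbers stored as text and as integers.
as_text = ["1", "10", "2"]
as_numbers = [int(value) for value in as_text]

# Text sorts character by character; numbers sort by magnitude.
print(sorted(as_text))     # ['1', '10', '2']
print(sorted(as_numbers))  # [1, 2, 10]

# "Adding" text concatenates the characters instead of summing the values.
print("".join(as_text))    # 1102
print(sum(as_numbers))     # 13

# A text key does not match a numeric key, so a lookup (or join) finds nothing.
customers = {1001: "Contoso"}
print(customers.get("1001"))       # None
print(customers.get(int("1001")))  # Contoso
```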

Common Data Types in Analytics

Here are some common data types you’ll work with:

| Category | Examples |
| --- | --- |
| Numeric | INT, BIGINT, DECIMAL, FLOAT |
| Text | STRING, VARCHAR |
| Date/Time | DATE, TIME, DATETIME, TIMESTAMP |
| Boolean | TRUE / FALSE |

Where Data Type Conversion Occurs in Fabric

Depending on workload and tool, you may convert data types in:

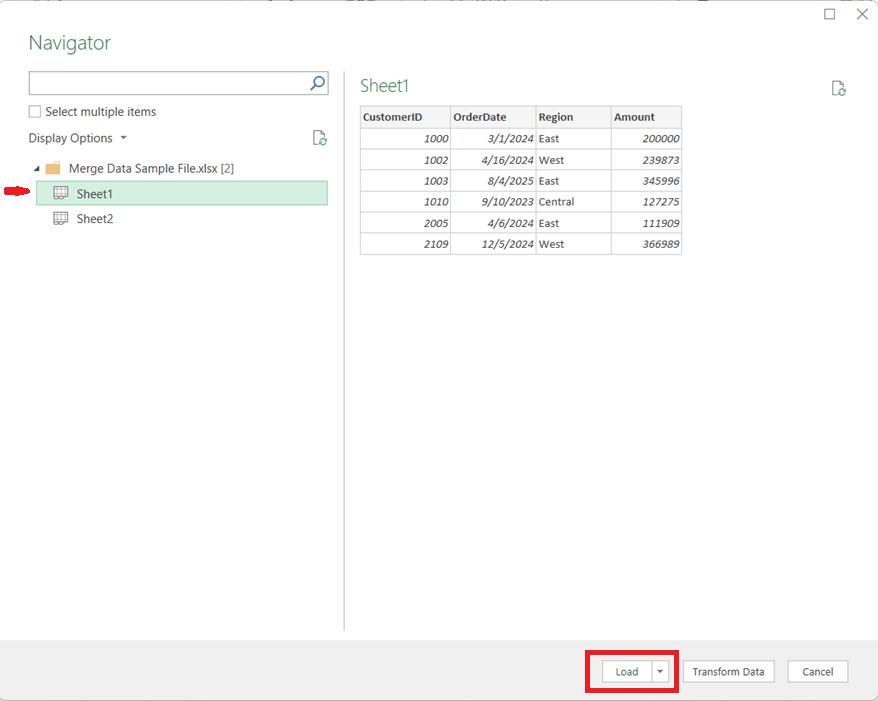

Power Query (Dataflows Gen2 & Lakehouses)

- Visual change type steps (Menu → Transform → Data Type)

- Applied steps stored in the query

- Useful for low-code transformation

SQL (Warehouse & Lakehouse SQL analytics endpoint)

- CAST, CONVERT, or TRY_CAST in SQL

- Applies at query time or when persisting transformed data

Spark (Lakehouse Notebooks)

- Explicit schema definitions

- Transformation commands like withColumn() with type conversion functions

Each environment has trade-offs. For example, Power Query is user-friendly but may not scale like SQL or Spark for very large datasets.

How to Convert Data Types

In Power Query

- Select the column

- Go to Transform → Data Type

- Choose the correct type (e.g., Whole Number, Decimal Number, Date)

Power Query generates a "Changed Type" step that is applied each time the query refreshes.

In SQL

SELECT

CAST(order_amount AS DECIMAL(18,2)) AS order_amount,

CONVERT(DATE, order_date) AS order_date

FROM Sales;

- CAST() is ANSI-standard SQL; CONVERT() is a T-SQL function that also accepts an optional style argument.

- Some engines support TRY_CAST() to avoid errors on incompatible values.

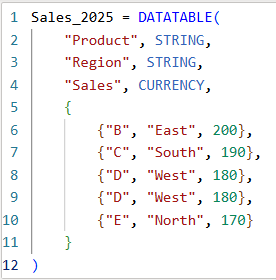

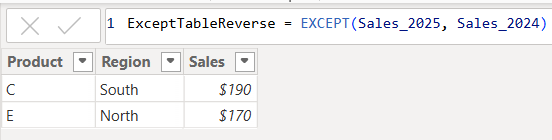

In Spark (PySpark or SQL)

PySpark example:

df = df.withColumn("order_date", df["order_date"].cast("date"))

SQL example in Spark:

SELECT CAST(order_amount AS DOUBLE) AS order_amount

FROM sales;
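When several columns need converting, the single withColumn() call shown earlier can be wrapped in a small helper. The sketch below is one possible way to do that; `cast_columns` is a hypothetical helper name, and the table and column names in the usage comments are assumptions for illustration. It relies only on the DataFrame `withColumn()` method and the Column `cast()` method.

```python
def cast_columns(df, type_map):
    """Return a DataFrame with each column in type_map cast to the given Spark type name.

    df       -- a Spark DataFrame
    type_map -- dict of column name -> Spark type string, e.g. {"order_date": "date"}
    """
    for column_name, spark_type in type_map.items():
        # Replace the column with a cast version of itself, keeping the same name.
        df = df.withColumn(column_name, df[column_name].cast(spark_type))
    return df


# Hypothetical usage in a notebook where a SparkSession named `spark` is available:
# sales = spark.read.table("sales")
# typed_sales = cast_columns(
#     sales,
#     {"order_date": "date", "order_amount": "decimal(18,2)"},
# )
# typed_sales.printSchema()
```

Depending on the Spark ANSI setting, values that cannot be cast may become null rather than raise an error, so it is worth checking null counts after a cast.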

When to Convert Data Types

You should convert data types:

- Before joins (to ensure matching keys)

- Before aggregations (to ensure correct math operations)

- Before loading into semantic models (to ensure correct behavior in Power BI)

- When cleaning source data (e.g., text fields that actually represent numbers or dates)

Common Conversion Scenarios

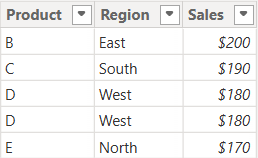

1. Text to Numeric

Often needed when source systems export numbers as text:

| Source | Target |
| --- | --- |
| "1000" | 1000 (INT/DECIMAL) |

2. Text to Date/Time

Date fields often arrive as text:

| Source | Target |
| --- | --- |
| "2025-08-01" | 2025-08-01 (DATE) |
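ISO-formatted text such as the value above converts unambiguously, but other layouts need an explicit format so that day and month are not swapped. A minimal sketch in plain Python follows; the function names are hypothetical and the MM/DD/YYYY layout is an assumed example, not something the source data is known to use.

```python
from datetime import date, datetime


def parse_iso_date(text):
    """Convert 'YYYY-MM-DD' text to a date; raises ValueError on invalid input."""
    return date.fromisoformat(text)


def parse_us_date(text):
    """Convert 'MM/DD/YYYY' text to a date using an explicit format string."""
    return datetime.strptime(text, "%m/%d/%Y").date()


print(parse_iso_date("2025-08-01"))  # 2025-08-01
print(parse_us_date("08/01/2025"))   # 2025-08-01
```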

3. Numeric to Text

Sometimes required when composing keys:

CONCAT(customer_id, order_id)
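One thing to watch when composing keys from numbers is that plain concatenation can make different ID pairs look identical. The sketch below shows the effect in plain Python; `make_key` and the `|` separator are assumptions chosen for illustration.

```python
def make_key(customer_id, order_id, separator="|"):
    """Build a text key from two numeric IDs, keeping them apart with a separator."""
    return f"{customer_id}{separator}{order_id}"


# Without a separator, (1, 23) and (12, 3) both become the text "123".
print(str(1) + str(23) == str(12) + str(3))  # True

# With a separator, the two keys stay distinct: "1|23" versus "12|3".
print(make_key(1, 23) == make_key(12, 3))    # False
```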

4. Boolean Conversion

Often used in logical flags:

| Source | Target |
| --- | --- |
| "Yes"/"No" | TRUE/FALSE |
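Flag columns rarely contain only two clean values, so a conversion usually needs to decide what to do with variants and unknowns. Here is a minimal sketch in plain Python; the accepted spellings in `TRUE_VALUES` and `FALSE_VALUES` are assumptions and should be adjusted to the actual source data.

```python
# Assumed spellings; extend these sets to match the real source values.
TRUE_VALUES = {"yes", "y", "true", "1"}
FALSE_VALUES = {"no", "n", "false", "0"}


def to_bool(value):
    """Map common text flags to True/False; return None for missing or unknown values."""
    if value is None:
        return None
    normalized = str(value).strip().lower()
    if normalized in TRUE_VALUES:
        return True
    if normalized in FALSE_VALUES:
        return False
    return None


print(to_bool("Yes"))    # True
print(to_bool(" no "))   # False
print(to_bool("maybe"))  # None
```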

Handling Conversion Errors

Not all values convert cleanly. Options include:

- TRY_CAST / TRY_CONVERT: returns NULL instead of raising an error

- Error handling in Power Query: replacing errors or invalid values

- Filtering out problematic rows before casting

Example:

SELECT TRY_CAST(order_amount AS DECIMAL(18,2)) AS order_amount

FROM sales;
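The "return NULL instead of failing" idea behind TRY_CAST can be sketched in plain Python as follows. This only emulates the concept: `try_cast_decimal` is a hypothetical helper, it enforces the scale but not the total precision of a DECIMAL(18,2), and its parsing and rounding rules are Python's, not those of any SQL engine.

```python
from decimal import Decimal, InvalidOperation, ROUND_HALF_UP


def try_cast_decimal(value, scale=2):
    """Return value as a Decimal rounded to `scale` places, or None if it cannot be converted."""
    if value is None:
        return None
    try:
        number = Decimal(str(value).strip())
        if not number.is_finite():
            # Reject NaN and Infinity, which Decimal would otherwise accept.
            return None
        return number.quantize(Decimal(10) ** -scale, rounding=ROUND_HALF_UP)
    except InvalidOperation:
        # Raised for text that is not a valid number, such as "N/A" or "1,000".
        return None


print(try_cast_decimal("12.5"))  # 12.50
print(try_cast_decimal("N/A"))   # None
```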

Performance and Governance Considerations

- Convert as early as possible to support accurate joins/filters

- Document transformations for transparency

- Use consistent type conventions across the organization

- Apply sensitivity labels appropriately; type conversion doesn't change the labels applied to the data

Impact on Semantic Models

When creating semantic models (Power BI datasets):

- Data types determine field behavior (e.g., date hierarchies)

- Incorrect types can cause:

  - Incorrect aggregations

  - Misleading visuals

  - DAX errors

Always validate types before importing data into the model.

Best Practices

- Always validate data values before conversion

- Use schema enforcement where possible (e.g., Spark schema)

- Avoid implicit type conversions during joins

- Keep logs or steps of transformations for reproducibility
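Validating values before conversion can be as simple as listing the ones that would fail. The following is a minimal sketch in plain Python, with `find_unconvertible` as a hypothetical helper and made-up sample values; in Fabric the same check would typically be written in Power Query, SQL, or Spark.

```python
def find_unconvertible(values, convert=int):
    """Return the values that `convert` rejects, without changing the input."""
    rejected = []
    for value in values:
        try:
            convert(value)
        except (TypeError, ValueError):
            rejected.append(value)
    return rejected


# Made-up sample: a thousands separator, an empty string, and a missing value all fail.
print(find_unconvertible(["1000", "2,500", "", None, "42"]))
# ['2,500', '', None]
```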

Key Takeaways for the DP-600 Exam

- Know why data type conversion matters for analytics

- Be able to choose the right tool (Power Query / SQL / Spark) for the context

- Understand common conversions (text→numeric, text→date, boolean conversion)

- Recognize when conversion must occur in the pipeline for correctness and performance

Practice Questions:

Here are 10 questions to test and help solidify your learning and knowledge. As you review these and other questions in your preparation, make sure to:

- Expect scenario-based questions rather than direct definitions

- Identify and understand why an option is correct (or incorrect), not just which one

- Look for and understand the usage scenario of keywords in exam questions to guide you

- Keep in mind that if a question mentions unexpected calculations, broken joins, or filtering issues, always consider data type mismatches as a possible root cause.

Question 1

Why is converting column data types important in an analytics solution?

A. It reduces storage costs

B. It ensures accurate calculations, joins, and filtering

C. It improves report visuals automatically

D. It encrypts sensitive data

✅ Correct Answer: B

Explanation:

Correct data types ensure accurate aggregations, proper join behavior, correct filtering, and predictable sorting.

Question 2

Which Fabric tool provides a visual, low-code interface for changing column data types?

A. SQL Analytics endpoint

B. Spark notebooks

C. Power Query

D. Eventhouse

✅ Correct Answer: C

Explanation:

Power Query allows users to change data types through a graphical interface and automatically records the steps.

Question 3

What is a common risk when converting text values to numeric data types?

A. Increased storage usage

B. Duplicate rows

C. Conversion errors or null values

D. Slower report rendering

✅ Correct Answer: C

Explanation:

Text values that are not valid numbers may cause conversion failures or be converted to nulls, depending on the method used.

Question 4

Which SQL function safely attempts to convert a value and returns NULL if conversion fails?

A. CAST

B. CONVERT

C. TRY_CAST

D. FORMAT

✅ Correct Answer: C

Explanation:

TRY_CAST avoids query failures by returning NULL when a value cannot be converted.

Question 5

When should data types ideally be converted in a Fabric analytics pipeline?

A. At report query time

B. After publishing reports

C. Early in the transformation process

D. Only in the semantic model

✅ Correct Answer: C

Explanation:

Converting data types early prevents downstream issues in joins, aggregations, and semantic models.

Question 6

Which data type is most appropriate for calendar-based filtering and time intelligence?

A. Text

B. Integer

C. Date or DateTime

D. Boolean

✅ Correct Answer: C

Explanation:

Date and DateTime types enable proper time-based filtering, hierarchies, and time intelligence calculations.

Question 7

Which Spark operation converts a column’s data type?

A. changeType()

B. convert()

C. cast()

D. toType()

✅ Correct Answer: C

Explanation:

The cast() method is used in Spark to convert a column’s data type.

Question 8

Why can implicit data type conversion during joins be problematic?

A. It improves performance

B. It hides data lineage

C. It may cause incorrect matches or slow performance

D. It automatically removes duplicates

✅ Correct Answer: C

Explanation:

Implicit conversions can prevent the engine from using indexes or other optimizations, and can lead to incorrect or inefficient joins.

Question 9

A numeric column is stored as text and sorts incorrectly (e.g., 1, 10, 2). What is the cause?

A. Incorrect aggregation

B. Missing values

C. Wrong data type

D. Duplicate rows

✅ Correct Answer: C

Explanation:

Text sorting is lexicographical, not numeric, leading to incorrect ordering.

Question 10

What is the impact of incorrect data types in a Power BI semantic model?

A. Only visuals are affected

B. Aggregations, filters, and DAX behavior may be incorrect

C. Reports fail to load

D. Sensitivity labels are removed

✅ Correct Answer: B

Explanation:

Data types influence how fields behave in calculations, visuals, and DAX expressions.