This post is a part of the PL-300: Microsoft Power BI Data Analyst Exam Prep Hub; and this topic falls under these sections:

Prepare the data (25–30%)

--> Transform and load the data

--> Convert Semi-Structured Data to a Table

Note that there are 10 practice questions (with answers and explanations) for each section to help you solidify your knowledge of the material. Also, there are 2 practice tests with 60 questions each available on the hub below the exam topics section.

In real-world analytics, data rarely arrives in a perfectly tabular format. Instead, analysts often work with semi-structured data, such as JSON files, XML documents, nested records, lists, or poorly formatted spreadsheets.

For the PL-300: Microsoft Power BI Data Analyst exam, Microsoft expects you to understand how to convert semi-structured data into a clean, tabular format using Power Query so it can be modeled, related, and analyzed effectively.

What Is Semi-Structured Data?

Semi-structured data does not follow a strict row-and-column structure but still contains identifiable elements and hierarchy.

Common examples include:

- JSON files (nested objects and arrays)

- XML files

- API responses

- Excel sheets with nested headers or inconsistent layouts

- Columns containing records or lists in Power Query

Exam insight: The exam does not focus on file formats alone — it focuses on recognizing non-tabular structures and flattening them correctly.

Where This Happens in Power BI

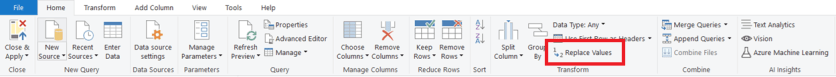

All semi-structured data transformations are performed in Power Query Editor, typically using:

- Convert to Table

- Expand (↔ icon) for records and lists

- Split Column

- Transpose

- Fill Down / Fill Up

- Promote Headers

- Remove Blank Rows / Columns

Common Semi-Structured Scenarios (Exam Favorites)

1. JSON and API Data

When loading JSON or API data, Power Query often creates columns containing:

- Records (objects)

- Lists (arrays)

These must be expanded to expose fields and values.

Example:

- Column contains a Record → Expand to columns

- Column contains a List → Convert to Table, then expand

2. Columns Containing Lists

A column may contain multiple values per row stored as a list.

Solution path:

- Convert list to table

- Expand values into rows

- Rename columns

Exam tip: Lists usually become rows, while records usually become columns.

3. Nested Records

Nested records appear as a single column with structured fields inside.

Solution:

- Expand the record

- Select required fields

- Remove unnecessary nested columns

4. Poorly Formatted Excel Sheets

Common examples:

- Headers spread across multiple rows

- Values grouped by section

- Blank rows separating logical blocks

Typical transformation sequence:

- Remove blank rows

- Fill down headers

- Transpose if needed

- Promote headers

- Rename columns

Key Power Query Actions for This Topic

Convert to Table

Used when:

- Data is stored as a list

- JSON arrays need flattening

- You need row-level structure

Expand Columns

Used when:

- Columns contain records or nested tables

- You want to expose attributes as individual columns

You can:

- Expand all fields

- Select specific fields

- Avoid prefixing column names (important for clean models)

Promote Headers

Often used after:

- Transposing

- Importing CSV or Excel files with headers in the first row

Fill Down

Used when:

- Headers or categories appear once but apply to multiple rows

- Semi-structured data uses grouping instead of repetition

Impact on the Data Model

Converting semi-structured data properly:

- Enables relationships to be created

- Allows DAX measures to work correctly

- Prevents ambiguous or unusable columns

- Improves model usability and performance

Improper conversion can lead to:

- Duplicate values

- Inconsistent grain

- Broken relationships

- Confusing field names

Exam insight: Microsoft expects you to shape data before loading it into the model.

Common Mistakes (Often Tested)

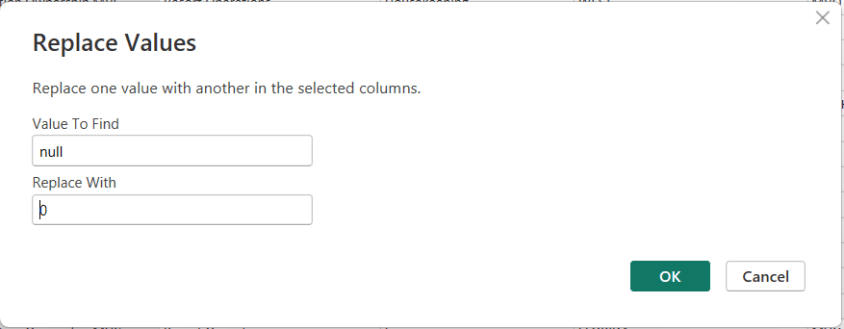

❌ Expanding Too Early

Expanding before cleaning can introduce nulls, errors, or duplicated values.

❌ Keeping Nested Structures

Leaving lists or records unexpanded results in columns that cannot be analyzed.

❌ Forgetting to Promote Headers

Failing to promote headers leads to generic column names (Column1, Column2), which affects clarity and modeling.

❌ Mixing Granularity

Expanding nested data without understanding grain can create duplicated facts.

Best Practices for PL-300 Candidates

- Inspect column types (Record vs List) before expanding

- Expand only required fields

- Rename columns immediately after expansion

- Normalize data before modeling

- Know when NOT to expand (e.g., reference tables or metadata)

- Validate row counts after conversion

How This Appears on the PL-300 Exam

Expect scenario-based questions like:

- A JSON file contains nested arrays — what transformation is required to analyze it?

- An API response loads as a list — how do you convert it to rows?

- A column contains records — how do you expose the attributes for reporting?

- What step is required before creating relationships?

Correct answers focus on Power Query transformations, not DAX.

Quick Decision Guide

| Data Shape | Recommended Action |

|---|---|

| JSON list | Convert to Table |

| Record column | Expand |

| Nested list inside record | Convert → Expand |

| Headers in rows | Transpose + Promote Headers |

| Grouped labels | Fill Down |

Final Exam Takeaways

- Semi-structured data must be flattened before modeling

- Power Query is the correct place to perform these transformations

- Understand the difference between lists, records, and tables

- The exam tests recognition and decision-making, not syntax memorization

Practice Questions

Go to the Practice Exam Questions for this topic.